The human auditory system is a marvel of biological engineering, capable of discerning intricate patterns in sound waves and translating them into meaningful perceptions. Among the many fascinating aspects of this system is the concept of structural hearing—the ability to perceive and interpret the underlying architecture of sound. This phenomenon goes beyond mere pitch or volume; it involves the brain's capacity to organize auditory information into coherent structures, much like how we perceive visual forms or spatial relationships.

Structural hearing plays a crucial role in how we experience music, language, and even environmental sounds. When listening to a symphony, for instance, we don’t just hear individual notes but rather the interplay of melodies, harmonies, and rhythms that form a cohesive whole. This ability to detect patterns and hierarchies in sound is what allows us to appreciate the complexity of a musical composition or follow the nuances of a spoken conversation in a noisy room.

Recent research in neuroscience and psychoacoustics has shed light on the mechanisms behind structural hearing. Studies suggest that the brain employs predictive coding to anticipate upcoming sounds based on established patterns. This predictive ability is thought to reflect an evolutionary need to quickly identify and respond to auditory cues, whether it’s the rustle of leaves signaling potential danger or the cadence of a friend’s voice conveying emotion. The auditory cortex, working together with other brain regions, continuously constructs and refines mental models of sound structures, enabling us to make sense of our acoustic environment.
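The idea of anticipating upcoming sounds from established patterns can be made concrete with a toy model. The sketch below is not a model of the auditory cortex. It assumes a melody can be reduced to a sequence of note names, learns which note tends to follow which (a first-order Markov, or bigram, model), and scores each new note by its surprise, the negative base-2 logarithm of its predicted probability, as a stand-in for prediction error. The names `train_bigrams` and `surprise` are hypothetical, chosen only for this illustration.

```python
import math
from collections import Counter, defaultdict


def train_bigrams(sequence):
    """Count how often each note follows each other note (hypothetical helper)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(sequence, sequence[1:]):
        counts[prev][nxt] += 1
    return counts


def surprise(counts, prev, nxt, alphabet_size):
    """Toy prediction error in bits: -log2 P(nxt | prev).

    Add-one smoothing gives never-heard continuations a finite
    (but large) surprise instead of an infinite one.
    """
    following = counts[prev]
    total = sum(following.values())
    p = (following[nxt] + 1) / (total + alphabet_size)
    return -math.log2(p)


# An "established pattern": the motif C-E-G repeated four times.
melody = ["C", "E", "G"] * 4
alphabet = sorted(set(melody) | {"F#"})
model = train_bigrams(melody)

expected = surprise(model, "C", "E", len(alphabet))     # fits the pattern
unexpected = surprise(model, "C", "F#", len(alphabet))  # breaks the pattern
print(f"C -> E : {expected:.2f} bits")    # prints "C -> E : 0.68 bits"
print(f"C -> F#: {unexpected:.2f} bits")  # prints "C -> F#: 3.00 bits"
```

The continuation that matches the repeated motif carries well under one bit of surprise, while the one that breaks it carries three. A more realistic model would condition on longer contexts than a single preceding note, but the principle of weighting each sound by how predictable it was stays the same.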

One of the most compelling aspects of structural hearing is its role in music perception. Musicians and composers have long exploited the brain’s propensity for pattern recognition to create works that resonate emotionally and intellectually. From the repetitive motifs in classical music to the layered textures of electronic compositions, structural hearing allows listeners to engage with music on a deeper level. Even in the absence of formal training, people intuitively grasp the "grammar" of music, such as the resolution of a dissonant chord or the satisfying conclusion of a melodic phrase.

Language, too, relies heavily on structural hearing. Phonemes, syllables, and words are organized into syntactic structures that convey meaning. The brain’s ability to parse these structures in real time is nothing short of remarkable. Consider how effortlessly we can distinguish between homophones like "there" and "their" based on context, or how we can follow a rapidly spoken sentence despite overlapping sounds. This efficiency underscores the brain’s expertise in extracting and processing structural information from auditory input.

Environmental sounds present another intriguing dimension of structural hearing. The rustling of leaves, the hum of traffic, or the chirping of birds—all these sounds are processed not as isolated events but as part of a larger acoustic landscape. The brain categorizes and prioritizes these sounds based on their relevance, allowing us to focus on what matters while filtering out background noise. This selective attention is a testament to the adaptive power of structural hearing in navigating everyday life.

Disruptions to structural hearing can have profound implications. Conditions like amusia, or "tone deafness," highlight the fragility of this system. Individuals with amusia struggle to perceive musical structure, often finding it difficult to recognize melodies or detect pitch changes. Similarly, auditory processing disorders can impair the ability to parse speech in noisy environments, underscoring the importance of structural hearing in communication. Understanding these conditions not only advances our knowledge of auditory perception but also informs therapeutic interventions.

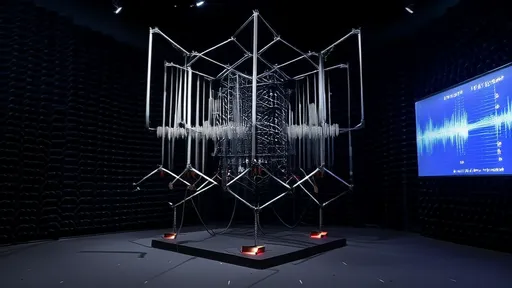

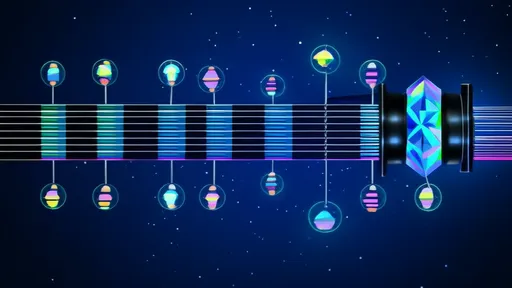

Technological advancements are also reshaping our understanding of structural hearing. Machine learning algorithms, for example, are being trained to mimic human auditory perception, with applications ranging from speech recognition to music composition. These developments offer exciting possibilities but also raise questions about the uniqueness of human hearing. Can a machine truly replicate the nuanced, context-dependent nature of structural hearing, or is it merely approximating the surface-level features of sound?

As research continues to unravel the complexities of structural hearing, one thing becomes clear: this ability is fundamental to our experience of the world. It shapes how we connect with others, how we derive pleasure from music, and how we interpret the sounds around us. Whether through the lens of neuroscience, psychology, or technology, the study of structural hearing reveals the extraordinary capabilities of the human brain and its enduring fascination with the architecture of sound.

By /Jul 25, 2025
